Home | monotonous.org

In our work on Firefox MacOS accessibility we routinely run into highly nuanced bugs in our accessibility platform API. The tree structure, an object attribute, the sequence of events, or the event payloads, is just off enough that we see a pronounced difference in how an AT like VoiceOver behaves. When we compare our API against other browsers like Safari or Chrome, we notice small differences that have out-sized user impacts.

In cases like that, we need to dive deep. XCode’s Accessibility Inspector shows a limited subset of the API, but web engines implement a much larger set of attributes that are not shown in the inspector. This includes an advanced, undocumented, text API. We also need a way to view and inspect events and their payloads so we can compare the sequence to other implementations.

Since we started getting serious about MacOS accessibility in Firefox in 2019 we have hobbled together an adhoc set of Swift and Python scripts to examine our work. It slowly started to coalesce and formalize into a python client library for MacOS accessibility called pyax.

Recently, I put some time into making pyax not just a Python library, but a nifty command line tool for quick and deep diagnostics. There are several sub commands I’ll introduce here. And I’ll leave the coolest for last, so hang on.

pyax tree

This very simply dumps the accessibility tree of the given application. But hold on, there are some useful flags you can use to drill down to the issue you are looking for:

--web

Only output the web view’s subtree. This is useful if you are troubleshooting a simple web page and don’t want to be troubled with the entire application.

--dom-id

Dump the subtree of the given DOM ID. This obviously is only relevant for web apps. It allows you to cut the noise and only look at the part of the page/app you care about.

--attribute

By default the tree dumper only shows you a handful of core attributes. Just enough to tell you a bit about the tree. You can include more obscure attributes by using this argument.

--all-attributes

Print all known attributes of each node.

--list-attributes

List all available attributes on each node in the tree. Sometimes you don’t even know what you are looking for and this could help.

Implementation note: An app can provide an attribute without advertising its availability, so don’t rely on this alone.

--list-actions

List supported actions on each node.

--json

Output the tree in a JSON format. This is useful with --all-attributes to capture and store a comprehensive state of the tree for comparison with other implementations or other deep dives.

pyax observe

This is a simple event logger that allows you to output events and their payloads. It takes most of the arguments above, like --attribute, and --list-actions.

In addition:

--event

Observe specific events. You can provide this argument multiple times for more than one event.

--print-info

Print the bundled event info.

pyax inspect

For visually inclined users, this command allows them to hover over the object of interest, click, and get a full report of its attributes, subtree, or any other useful information. It takes the same arguments as above, and more! Check out --help.

Getting pyax

Do pip install pyax[highlight] and its all yours. Please contribute with code, documentation, or good vibes (keep you vibes separate from the code).

What We Did

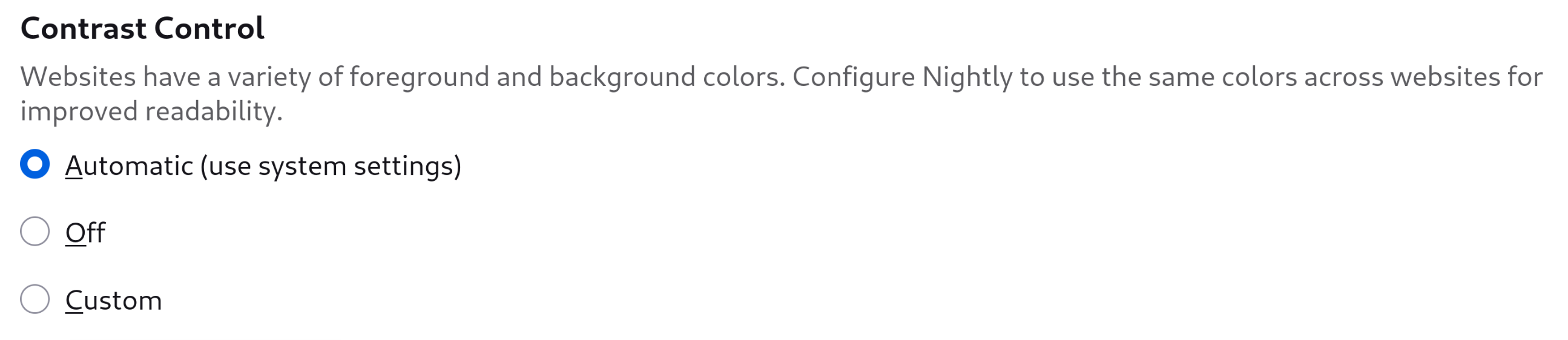

In today’s nightly build of Firefox we introduced a simple three-state toggle for color contrast.

It allows you to choose between these options:

- Automatic (use system settings)

- Honor the system’s high contrast preferences in web content. If this is enabled, and say you are using a Windows High Contrast theme, the web content’s style will be overridden wherever needed to display text and links with the system’s colors.

- Off

- Regardless of the system’s settings the web content should be rendered as the content author intended. Colors will not be forced.

- Custom

- Always force user specified colors in web content. Colors for text and links foreground and background can be specified in the preferences UI.

Who Would Use This and How Would They Benefit?

There are many users with varying degrees of vision impairment that benefit from having control over the colors and intensity of the contrast between text and its background. We pride ourselves in Firefox for offering fine grained control to those who need it. We also try to do a good job anticipating the edge cases where forced high contrast might interfere with a user’s experience, for example we put a solid background backplate under text that is superimposed on top of an image.

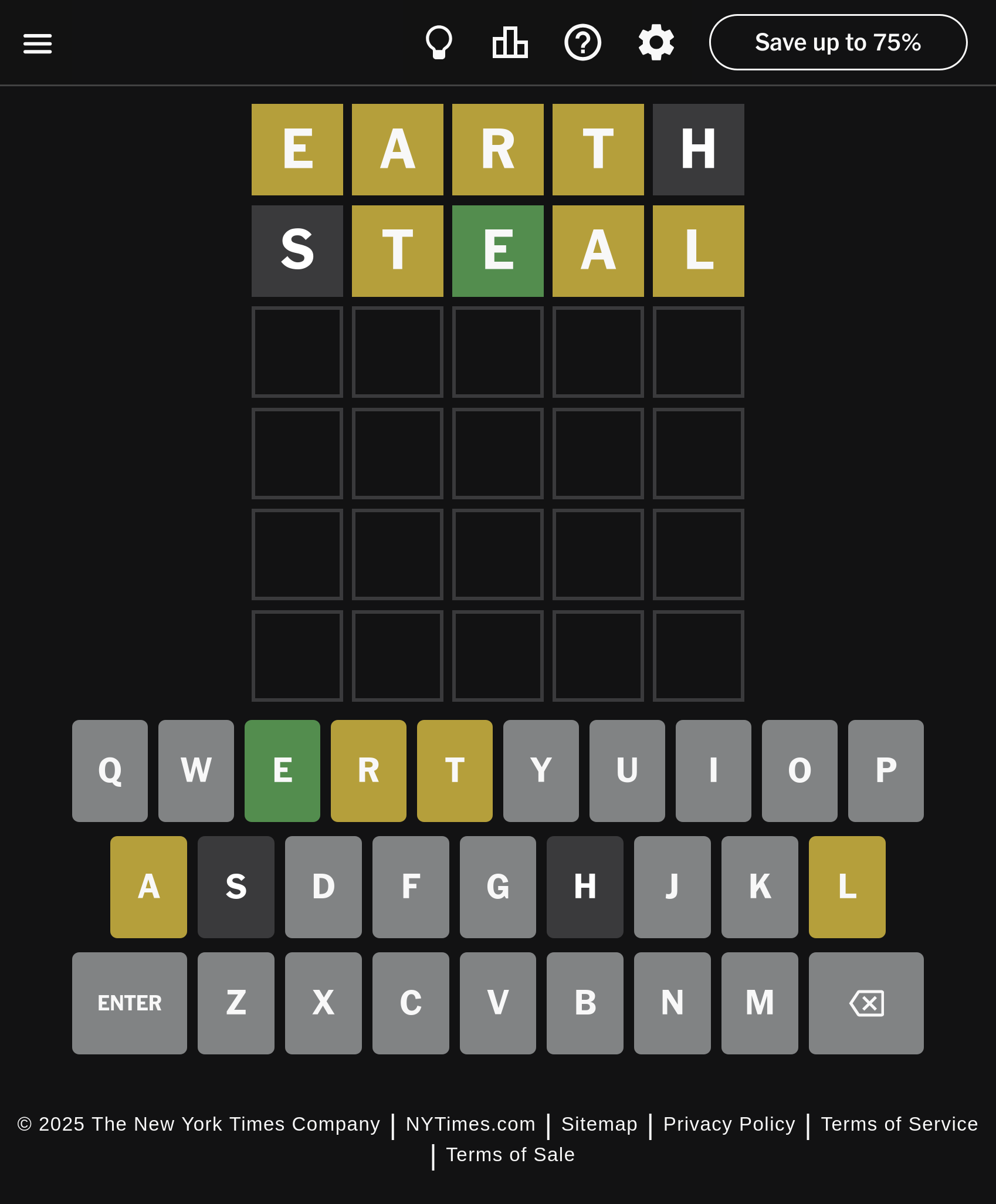

But, the web is big and complex and there will always be cases where forced colors will not work. For example, take this Wordle puzzle:

The gold tiles indicate letters that are in the hidden word, and gold tiles represent letters that are in the word and in the right place in the word.

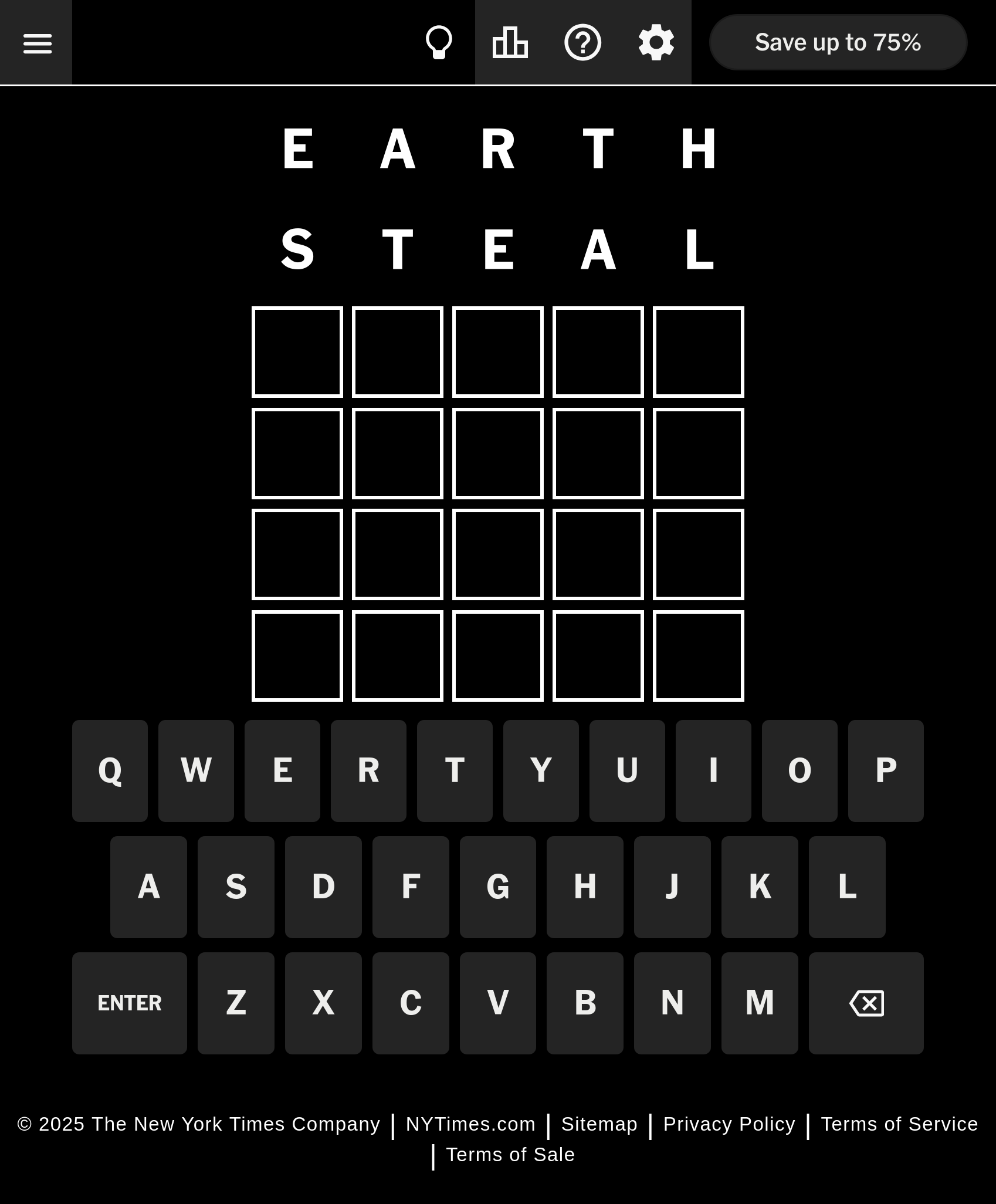

When a user is in forced colors mode, the background of the tiles disappears and the puzzle becomes unsolvable:

What More Can We Expect?

The work we did to add contrast control to the settings is a first step in allowing a user to quickly configure their web browser experience in order to adapt to their current uses. Just like text zoom, there is no one-size-fits-all. We will be working to allow users to easily adjust their color contrast settings as they are browsing and using the web.

A New Speech API and Framework

I wrote the beginning of what I hope will be an appealing speech API for desktop Linux and beyond. It consists of two parts, a speech provider interface specification and a client library. My hope is that the simplicity of the design and its leverage of existing free desktop technologies will make adoption of this API easy.

Of course, Linux already has a speech framework in the form of Speech Dispatcher. I believe there have been a handful of technologies and recent developments in the free desktop space that offer a unique opportunity to build something truly special. They include:

D-Bus

D-Bus came about several years after Speech Dispatcher. It is worth pausing and thinking about the different architectural similarities between a local speech service and a desktop IPC bus. The problems that Speech Dispatcher tackles, such as auto-spawning, wire protocols, IPC transports, session persistence, modularity, and others have been generalized by D-Bus.

Instead of a specialized module for Speech Dispatcher, what if speech engines just exposed an interface on the session bus? With a service file they can automatically spawn and go away as needed.

Flatpak (and Snap??)

Flatpak offers a standardized packaging format that can encapsulate complex setups into a sandboxed installation with little to no thoughts of the dependency hell Linux users have grown accustomed to. One neat feature in Flatpaks is that they support exposing fully sandboxed D-Bus services, such as a speech engine. Flatpaks offer an out-of-band distribution model that sidesteps the limitations and fragmentation of traditional distro package streams. Flatpak repositories like Flathub are the perfect vehicle for speech engines because of the mix of proprietary and peculiar licenses that are often associated with them, for example…

Neural text to speech

I have always been frustrated with the lack of naturally sounding speech synthesis in free software. It always seemed that the game was rigged and only the big tech platforms would be able to afford to distribute nice sounding voices. This is all quickly changing with a flurry of new speech systems covering many languages. It is very exciting to see this happening, it seems like there is a new innovation on this front every day. Because of the size of some of the speech models, and because of the eclectic copyright associated with them we can’t expect distros to preinstall them, Flatpaks and Neural speech systems are a perfect match for this purpose.

Talking apps that aren’t screen readers

In recent years we have seen many new applications of speech synthesis entering the mainstream - navigation apps, e-book readers, personal assistants and smart speakers. When Speech Dispatcher was first designed, its primary audience was blind Linux users. As the use cases have ballooned so has the demand for a more generalized framework that will cater to a diverse set of users.

There is precedent for technology that was designed for disabled people becoming mainstream. Everyone benefits when a niche technology becomes conventional, especially those who depend on it most.

Questions and Answers

I’m sure you have questions, I have some answers. So now we will play our two roles, you the perplexed skeptic, unsure about why another software stack is needed, and me - a benevolent guide who can anticipate your questions.

Why are you starting from scratch? Can’t you improve Speech Dispatcher?

Speech Dispatcher is over 20 years old. Of course, that isn’t a reason to replace it. After all, some of your favorite apps are even older. Perhaps there is room for incremental improvements in Speech Dispatcher. But, as I wrote above, I believe there are several developments in recent years that offer an opportunity for a clean slate.

I love eSpeak, what is all this talk about “naturally sounding” voices?

eSpeak isn’t going anywhere. It has a permissible license, is very responsive, and is ergonomic for screen reader users who consume speech at high rates for long periods of time. We will have an eSpeak speech provider in this new framework.

Many other users, who rely on speech for narration or virtual assistants will prefer a more natural voice. The goal is to make those speech engines available and easy to install.

I know for a fact that you can use /insert speech engine/ with Speech Dispatcher

It is true that with enough effort you can plug anything into Speech Dispatcher.

Speech Dispatcher depends on a fraught set of configuration files, scripts, executables and shared libraries. A user who wants to use a synthesis engine other than the default bundled one in their distro needs to open a terminal, carefully place resources in the right place and edit configuration files.

What plan do you have to migrate all the current applications that rely on Speech Dispatcher?

I don’t. Both APIs can coexist. I’m not a contributor or maintainer of Speech Dispatcher. There might always be a need for the unique features in Speech Dispatcher, and it might have another 20 years of service ahead.

I couldn’t help but notice you chose to write libspiel in C instead of a modern memory safe language with a strong ownership model like Rust.

Yes.

Half of the DOM Web Speech API deals with speech synthesis. There is a method called speechSynthesis.getVoices that returns a list of all the supported voices in the given browser. Your website can use it to choose a nice voice to use, or present a menu to the user for them to choose.

The one tricky thing about the getVoices() method is that the underlying implementation will usually not have a list of voices ready when first called. Since speech synthesis is not a commonly used API, most browsers will initialize their speech synthesis lazily in the background when a speechSynthesis method is first called. If that method is getVoices() the first time it is called it will return an empty list. So what will conventional wisdom have you do? Something like this:

function getVoices() {

let voices = speechSynthesis.getVoices();

while (!voices.length) {

voices = speechSynthesis.getVoices()

}

return voices;

}

If synthesis is indeed not initialized and first returns an empty list, the page will hang in an infinite CPU-bound loop. This is because the loop is monopolizing the main thread and not allowing synthesis to initialize. Also, an empty voice list is a valid value! For example, Chrome does not have speech synthesis enabled on Linux and will always return an empty list.

So, to get this working we need to not block the main thread by making asynchronous calls to getVoices, we should also have a limit on how many times we attempt to call getVoices() before giving up, in the case where there are indeed no voices:

async function getVoices() {

let voices = speechSynthesis.getVoices();

for (let attempts = 0; attempts < 100; attempts++) {

if (voices.length) {

break;

}

await new Promise(r => requestAnimationFrame(r));

voices = speechSynthesis.getVoices();

}

return voices;

}

But that method still polls, which isn’t great and is needlessly wasteful. There is another way to do it. You could rely on the voiceschanged DOM event that will be fired once synthesis voices become available. We will also add a timeout to that so our async method returns even if the browser never fires that event.

async function getVoices() {

const GET_VOICES_TIMEOUT = 2000; // two second timeout

let voices = window.speechSynthesis.getVoices();

if (voices.length) {

return voices;

}

let voiceschanged = new Promise(

r => speechSynthesis.addEventListener(

"voiceschanged", r, { once: true }));

let timeout = new Promise(r => setTimeout(r, GET_VOICES_TIMEOUT));

// whatever happens first, a voiceschanged event or a timeout.

await Promise.race([voiceschanged, timeout]);

return window.speechSynthesis.getVoices();

}

You’re welcome, Internet!