When we first made Firefox accessible for Android, the majority of our users were using Gingerbread. Accessibility in Android was in its infancy, and certain things we take for granted in mobile screen readers were not possible.

For example, swiping and explore by touch were not available in TalkBack, the Android screen reader. The primary mode of interaction was with the directional keys of the phone, and the only accessibility event was “focus”.

The bundled Android apps were hardly accessible, so it was a challenge to make a mainstream, full featured, web browser accessible to blind users. Firefox for Android at the time was undergoing a major overhaul, so it was a good time to put some work into our own screen reader support.

We were governed by two principles when we designed our Android accessibility solution:

- Integrate with the platform, even if it is imperfect. Don’t require the user to learn anything new. The screen reader was less than intuitive. If the user jumped through the hoops to learn how to use the directional pad, they have endured enough. Don’t force them through additional steps. Don’t make them install addons or change additional settings.

- Introduce new interaction modes through progressive enhancements. As long as the user could use the d-pad, we could introduce other features that may make users even happier. There are a number of examples of where we did this:

- As early as our Gingerbread support, we had keyboard quick navigation support. Did your phone have a keyboard? If so, you could press “h” to jump to the next heading instead of arrowing all the way down.

- When Ice Cream Sandwich introduced explore by touch, we added swipe left/right to get to previous or next items.

- We also added three-finger swipe gestures to do quick navigation between element types. This feature got mixed feedback: it is hard to swipe horizontally with three fingers on a 3.5″ phone.

It was a real source of pride to have the most accessible and blind-friendly browser on Android. Since our initial Android support, our focus has gone elsewhere while we continued to maintain our offering. In the meantime, Google has upped its game. Android has gotten a lot more sophisticated on the accessibility front, and Chrome now integrates much better with TalkBack (I would like to believe we inspired them).

Now that Android has good web accessibility support, it is time that we integrate with those features to offer our users the seamless experience they expect. In tomorrow’s Nightly, you will see a few improvements:

- TalkBack users can pinch-zoom the web content with three fingers, just like they can on Chrome (bug 1019432).

- The TalkBack local context menu has more of the options that users expect with web content, like section, list, and control navigation modes (bug 1182222). I am proud of our section quick nav mode; I think it will prove to be pretty useful.

- We integrate much better with the system cursor highlight rectangle (bug 1182214).

- The TalkBack scroll gesture works as expected. Also, range widgets can be adjusted with the same gesture (bug 1182208).

- Improved BrailleBack support (bug 1203697).

That’s it, for now! We hope to prove our commitment to accessibility as Firefox branches out to other platforms. Expect more to come.

Every committed Mozillian and many enthusiastic end-users will use a pre-release version of Firefox.

On Mac and Windows this is pretty straightforward: you simply download the Firefox Nightly/Aurora/Beta dmg or setup tool and get going. Once it is installed, it is a proper desktop application. You can make it your default browser, and life goes on.

On Linux, we rely much more on packagers to prepare an application for the distribution before we can use it. This usually works really well, but sometimes you really just want to use an upstream app without any gatekeepers.

The pre-release versions of Firefox for Linux come in tarballs. You unpack them, and can run them out of the unpacked directory. But they don’t integrate well. You can’t set them as your default browser, the icon is a generic square, and opening links from other apps is a headache. In short, it’s a less than polished experience.

So here is a small script I wrote. It does a few things:

- It downloads the latest Firefox from the channel of your choosing.

- It unpacks it into a hidden directory in your $HOME.

- It adds a symbolic link to the main executable in ~/.local/bin.

- It adds symbolic links for the icon’s various sizes into your icon theme in ~/.local/share/icons.

- It adds a desktop file to ~/.local/share/applications.

It doesn’t require root privileges, and is contained to your home directory so it won’t conflict with the system Firefox installation or touch the system libxul. Typically, you only need to run the script once per channel. After a channel is installed, it will get automatic updates through the actual app.
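The desktop file is the piece that lets the desktop treat the unpacked build as a real browser. As a rough sketch of what such a file contains (this is not taken from foxlocal itself; the function name, the example paths, and the icon name are made up for illustration), here is a small JavaScript helper that builds the text of a desktop entry:

```javascript
// Hypothetical helper: build the text of a .desktop file for a browser
// installed under the user's home directory. The keys come from the
// freedesktop.org Desktop Entry Specification; the values are examples.
function desktopEntry({ name, execPath, iconName }) {
  return [
    "[Desktop Entry]",
    "Type=Application",
    `Name=${name}`,
    // %u is replaced by the URL when another app opens a link.
    `Exec=${execPath} %u`,
    `Icon=${iconName}`,
    "Categories=Network;WebBrowser;",
    // Declaring these handlers is what makes the app eligible to be
    // offered as a default browser.
    "MimeType=text/html;x-scheme-handler/http;x-scheme-handler/https;",
    "",
  ].join("\n");
}

console.log(
  desktopEntry({
    name: "Firefox Nightly",
    execPath: "/home/me/.local/bin/firefox-nightly", // assumed link location
    iconName: "firefox-nightly", // assumed icon name
  })
);
```

The `Icon` value is looked up by name in the icon theme, which is why the icon links go into ~/.local/share/icons rather than being referenced by path.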

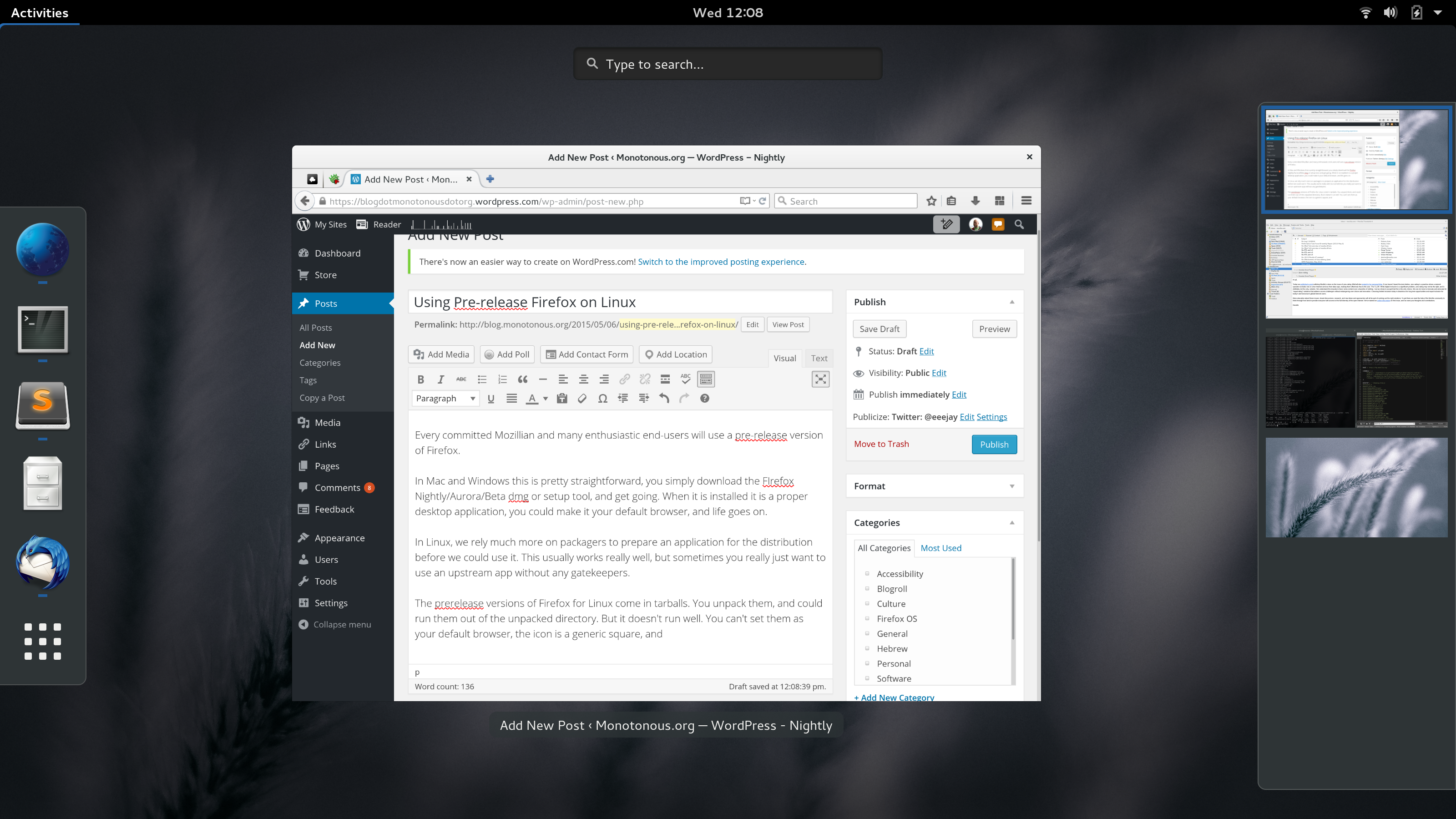

See the nice icon?

So, here are some commands you can copy to your terminal to get a pre-release Firefox installed:

Nightly

curl https://raw.githubusercontent.com/eeejay/foxlocal/master/foxlocal.py | python - nightly

Aurora

curl https://raw.githubusercontent.com/eeejay/foxlocal/master/foxlocal.py | python - aurora

Beta

curl https://raw.githubusercontent.com/eeejay/foxlocal/master/foxlocal.py | python - beta

Release

curl https://raw.githubusercontent.com/eeejay/foxlocal/master/foxlocal.py | python - release

Now that eSpeak runs pretty well in JS, it is time for a Web Speech API extension!

What is the Web Speech API? It gives any website access to speech synthesis (and recognition) functionality. Chrome and Safari already have this built in. This extension adds speech synthesis support to Firefox, along with eSpeak voices.
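For a feel of the synthesis half of the API, here is a minimal sketch. `speechSynthesis` and `SpeechSynthesisUtterance` are the standard names from the Web Speech API; the `speak` wrapper and its options are hypothetical and exist only so the example degrades gracefully where no speech engine is present.

```javascript
// Hypothetical wrapper around the standard Web Speech API objects.
// Returns false when the page has no speech synthesis support.
function speak(text, {
  synth = globalThis.speechSynthesis,
  Utterance = globalThis.SpeechSynthesisUtterance,
  lang = "en-US",
} = {}) {
  if (!synth || !Utterance) {
    return false; // no engine available (for example, no extension installed)
  }
  const utterance = new Utterance(text);
  utterance.lang = lang; // hint for which voice/language to use
  synth.speak(utterance); // queues the utterance for playback
  return true;
}

speak("Hello from the Web Speech API");
```

In a browser with synthesis support this queues the phrase for speaking; anywhere else it simply returns `false`.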

For the record, we have had speech synthesis support in Gecko for about 2 years. It was introduced for accessibility needs in Firefox OS, and now it is time to make sure it is supported on desktop as well.

Why an extension instead of built-in support? A few reasons:

- An addon will provide speech synthesis to Firefox now as we implement built-in platform-specific solutions for future releases.

- An addon will allow us to surface current bugs both in our Speech API implementation, and in the spec.

- We designed our speech synthesis implementation to be extensible with addons, and this is a good proof of concept.

- People are passionate about eSpeak. Some people love it, some people don’t.

So now I will shut up, and let eSpeak do the talking:

tl;dr

Look! A flashy demo with buttons!

Background

A long time ago, we were investigating a way to expose text-to-speech functionality on the web. This was long before the Web Speech API was drafted, and it wasn’t yet clear what this kind of feature would look like. Alon Zakai stepped up, and proposed porting eSpeak to JavaScript with Emscripten. This was a provocative idea: was our platform powerful enough to support speech synthesis purely in JS? Alon got back a few days later with a working demo. The answer was “yes”.

While the speak.js port was very impressive, it didn’t answer many of our practical needs. For example, the latency was not good enough for making a responsive UI: you could wait more than a couple of seconds to hear a short phrase. In addition, the longer the text you wanted to synthesize, the longer you needed to wait.

It proved a concept, but there were missing pieces we didn’t have four years ago. Today, we live in the future of 2011, and things that were theoretical then are possible now (in the future).

asm.js

Today, Emscripten will compile C/C++ code into a subset of JavaScript called asm.js. This subset is optimized in all current browsers, and brings performance to within about 2x of native speed. That is really good. eSpeak is a pretty lightweight library already, and the extra performance boost of asm.js makes speech feel instantaneous.

Transferable Objects

Passing data between a web worker and a parent process used to mean a lot of copying, since the worker doesn’t share memory with the parent process. But today, you can transfer ownership of ArrayBuffers with zero copying. When the web worker is ready to send audio data back to the calling process, it can do so while maintaining a single copy of the audio buffer.
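As a small illustration of what a transfer looks like, here is a sketch using a `MessageChannel` as a stand-in for the worker connection. The `postSamples` helper and the message shape are hypothetical; the transfer list passed as the second argument to `postMessage` is the standard mechanism.

```javascript
// Hypothetical helper: hand a chunk of audio samples to the other end of a
// port (a Worker or MessagePort) by transferring its buffer, not copying it.
// Note that this transfers the whole underlying ArrayBuffer of the view.
function postSamples(port, samples) {
  port.postMessage(
    { samples: samples.buffer, length: samples.length },
    [samples.buffer] // transfer list: ownership moves to the receiver
  );
}

const channel = new MessageChannel();
const chunk = new Float32Array(4096);

postSamples(channel.port1, chunk);

// The sender's view is now detached; the data lives only on the other side.
console.log(chunk.byteLength); // 0

channel.port1.close();
```

The sender can no longer read or write the samples after the call, which is exactly what makes it safe to skip the copy.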

Web Audio API

We have a slick, full featured Audio API today on the web. When speak.js came out in 2011, it used a prefixed method on an <audio> element to write PCM data. Today, we have a proper API that enables us to take the audio data and send it through an elaborate pipeline of filters and mixers, or even send it into the ether with WebRTC.
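To make that concrete, here is a minimal sketch of playing one block of PCM samples with the Web Audio API. The `playSamples` helper is hypothetical, and it assumes the samples are already a mono `Float32Array` with values between -1 and 1; the calls on the context and buffer are standard Web Audio methods.

```javascript
// Hypothetical helper: play one block of mono PCM samples.
// `ctx` is expected to be an AudioContext; `samples` a Float32Array.
function playSamples(ctx, samples, sampleRate) {
  // One channel, samples.length frames, at the synthesizer's sample rate.
  const buffer = ctx.createBuffer(1, samples.length, sampleRate);
  buffer.copyToChannel(samples, 0);

  const source = ctx.createBufferSource();
  source.buffer = buffer;
  // Any chain of filter or gain nodes could sit between source and destination.
  source.connect(ctx.destination);
  source.start();
  return source;
}
```

In a page, this would be called as `playSamples(new AudioContext(), samples, 22050)`, where the sample rate is whatever the synthesizer was configured to produce.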

Emscripten Got Fancy

This was my first time playing with it, so I am not sure what was available in 2011. But, if I have to guess, it was not as powerful and fun to work with. Emscripten’s new WebIDL support makes adding bindings extremely easy. You still get a chance to do some pointer arithmetic, but that’s supposed to be fun. Right?

So here is eSpeak.js!

I wanted to do a real API port, as opposed to simply porting a command line program that takes input and writes a WAV file. Why? Two main reasons:

- eSpeak can progressively synthesize speech. If you provide a callback to espeak_Synth(), it will be called repeatedly with as many samples as you defined in the buffer size. It doesn’t matter how long the text is that you want synthesized: it will fill the buffer and return it to you immediately. This allows for a consistently low latency between the moment you call espeak_Synth() and the moment you can start playing audio.

- eSpeak supports events. If you use a callback, you get access to a list of events that provide a timestamp in the audio, and the type of event that occurs there, such as word or sentence boundaries.

And, of course, with all the recent-ish platform improvements above, it was really time for a fresh attempt.
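The progressive callback pattern can be sketched without any real synthesizer. The function below is a hypothetical stand-in, not the eSpeak.js API: it pretends to render a fixed number of samples and hands them to a callback one buffer at a time, which is the shape of interaction described in the first point.

```javascript
// Hypothetical stand-in for a progressive synthesizer (NOT the eSpeak.js API).
// It "renders" totalSamples of silence and delivers them bufferSize at a time.
function synthesizeProgressively(totalSamples, bufferSize, onChunk) {
  for (let offset = 0; offset < totalSamples; offset += bufferSize) {
    const length = Math.min(bufferSize, totalSamples - offset);
    onChunk(new Int16Array(length), offset);
  }
}

// A consumer can start playback on the first callback. The size of that first
// chunk depends only on the buffer size, not on how long the utterance is.
const chunkSizes = [];
synthesizeProgressively(10000, 4096, (samples) => {
  chunkSizes.push(samples.length);
});
console.log(chunkSizes); // [ 4096, 4096, 1808 ]
```

With a command line port, by contrast, nothing is available until the whole WAV file has been written.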

Future Work

- Break up the data files. Right now, eSpeak.js is a download of over 2MB. That’s because I packaged all the eSpeak data files indiscriminately. There may be a few bits that are redundant. On the flip side, you get all 99 voice/language combinations (that’s a good deal for 2MB, eh?). It would be cool to break it up into a few data files and allow the developer to choose which voices to bundle or, even better, just grab them on demand.

- Make a demo of the speech events. It makes my head hurt to think about how to do something compelling. But it is a neat feature that should somehow be shown.

- ScriptProcessorNode is apparently deprecated. This is going to need to be ported to an AudioWorker once that is widely implemented.

I’m done apologizing, here is the demo.